Of the many lessons from the VR exhibits in the Sundance New Frontier program, one stands out: virtual reality is both astonishingly real and, at times, terribly awkward. The hyper-realism is right in front of you: put on a VR headset and open your eyes. But try to manipulate objects in the virtual environment and you can be as clumsy as a two-year-old. Even with sensors wrapped around your hands, handling objects can quickly break the sense of realism.

At SXSW last year, Palmer Luckey of Oculus called touch the "Holy Grail" of VR. It's a difficult challenge, and even Oculus has delayed its Touch controller, which is now scheduled for the latter part of 2016.

Two VR examples from Sundance

For an example of what we mean, look at two VR installations at Sundance. The first is the stunningly beautiful HTC Vive experience theBlu: Encounter. You find yourself standing on the deck of a sunken ship, with small fish swimming around you. Out of the watery depths, an 80-foot whale glides slowly up beside you. It is absolutely breathtaking. You feel you are encountering a living creature that you could reach out and touch. As it swims off, you feel the need to duck out of the path of its tail.

In another whale-themed installation, The Leviathan Project, you stand in a virtual laboratory and are asked to move levers and objects around a table. It's part of a larger interactive narrative, and the objects are fairly easy to grasp.

Yet in our own experience, and from watching others, most participants needed help placing the objects in the right slots. It wasn't impossible, but it should have been much easier.

How to make virtual reality real

The Leviathan Project illustrates a fundamental problem in virtual reality: the illusion of immersion cracks as soon as we try to pick up an object. The new hand controllers from Oculus and HTC will help, but other solutions now in the works promise to change our virtual experiences profoundly.

Rachel Metz examines the issue in an article in the MIT Technology Review, "The Step Needed to Make Virtual Reality More Real." She looks at three tracking approaches in development: tracking your head, your eyes, and your hands. Eye and hand tracking interest us most, since they are the hardest problems to solve.

Using your hands

Metz describes a motion-sensing hand device by the startup Gest that tracks the movement of your individual fingers:

A startup called Gest is trying to take advantage of another body part: your fingers. The San Francisco startup is building a motion-sensor-filled device that wraps around your palm and includes rings that slide around four of your fingers. The company plans to roll out its gadget in November and will give developers tools to make Gest work with virtual reality and other applications.

The Gest device is already small, and it is easy to imagine it shrinking into nothing more than a thin pair of gloves in the near future.

Using your eyes

The second device comes from Eyefluence, which is developing eye-tracking technology that lets you manipulate objects just by looking at them:

The company has developed a flexible circuit that holds a camera, illumination sources, and other tiny bits of hardware. It is meant to fit around a small metal chassis that the company hopes to have embedded in future virtual-reality headsets.

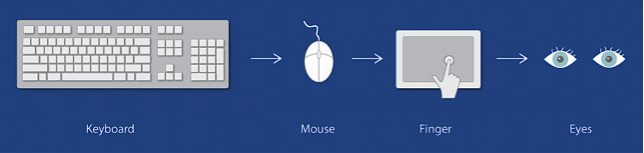

Eyefluence sees its technology as the next logical step in how we interact with computers: from keyboards, to mice, to touchpads, and now to controlling the digital environment with our gaze. The company's aim is to build this capability directly into our VR headsets.

[UPDATE: Eyefluence was bought by Google in late 2016. We expect to see its technology reappear in Google VR headsets in the future. – April 24, 2017]

Touch the future

For VR to fully develop as an entertainment medium and learning resource, we will need to interact with objects as easily as we do in real life.

Now imagine combining these technologies with the research of Ultrahaptics, a company that wants to use an array of ultrasound emitters to simulate objects and sensations:

. . . tiny bubbles bursting on your fingertips, a stream of liquid passing over your hand, the outlines of three-dimensional shapes.

That starts to sound like the Holodeck from Star Trek. But get ready, because that is exactly where we're headed.

Emory Craig is a writer, speaker, and consultant specializing in virtual reality (VR) and artificial intelligence (AI) with a rich background in art, new media, and higher education. A sought-after speaker at international conferences, he shares his unique insights on innovation and collaborates with universities, nonprofits, businesses, and international organizations to develop transformative initiatives in XR, AI, and digital ethics. Passionate about harnessing the potential of cutting-edge technologies, he explores the ethical ramifications of blending the real with the virtual, sparking meaningful conversations about the future of human experience in an increasingly interconnected world.