If the weekend didn’t bring enough chaos to OpenAI, the turmoil continues this week as the AI startup grapples with its future, and with whether it has one at all.

A quick recap: OpenAI’s board fired CEO Sam Altman on Friday afternoon in a shocking move. President and co-founder Greg Brockman was demoted and then quit the same day. Several prominent AI researchers also resigned in support of Altman. Over the weekend, investors led by Microsoft Corp. and Thrive Capital worked behind the scenes to negotiate Altman’s return as CEO, and he met with board members on Sunday. Altman tweeted a picture of himself wearing a guest badge, saying it would be the “first and last time” he wore one, implying that he would either return as CEO or never set foot in the building again.

Talks broke down on Sunday night, and just hours later came word that Altman and Brockman would join Microsoft to head a new advanced AI research lab. Staff were seen leaving OpenAI’s headquarters on Sunday night, visibly distraught, as the company’s future hung in the balance.

Two weeks ago, when Sam Altman walked onstage at OpenAI’s DevDay to announce new products, no one could have predicted any of this. The company has now gone through three CEOs in three days, which may be a record for a company with the fastest-growing tech product on the market.

It all makes the classic Silicon Valley story of Steve Jobs being forced out of Apple look like a children’s book. At least that story had a happy ending, with Jobs returning to, and transforming, Apple.

The Chaos at OpenAI Continues

Today’s events make you wonder how OpenAI ever functioned as a going concern. Here is where things stand:

New Interim CEO

On Sunday night, OpenAI announced that Emmett Shear, the former chief executive of the live-streaming site Twitch, would take over as interim CEO. That might seem a curious choice, except that he has embraced a form of “AI doomerism.” As The New York Times noted,

But in interviews and on social media, Mr. Shear has articulated a view about the risks of artificial intelligence that could appeal to the board members of OpenAI, who pushed out Mr. Altman at least in part over their fears that he was not paying enough attention to the potential threat posed by the company’s technology.

While it might seem like a good fit for OpenAI’s board, it’s hard to see how this benefits either OpenAI or artificial intelligence itself. The rest of the AI community isn’t going to slow down just because OpenAI decides to. But the decision is a “stinging rebuke” (Time) to the investors and employees who pushed for Altman’s return.

And staff delivered their own stinging rebuke to Shear: most simply did not attend the all-hands video meeting intended to introduce him as OpenAI’s new CEO.

OpenAI’s Staff Threaten to Leave

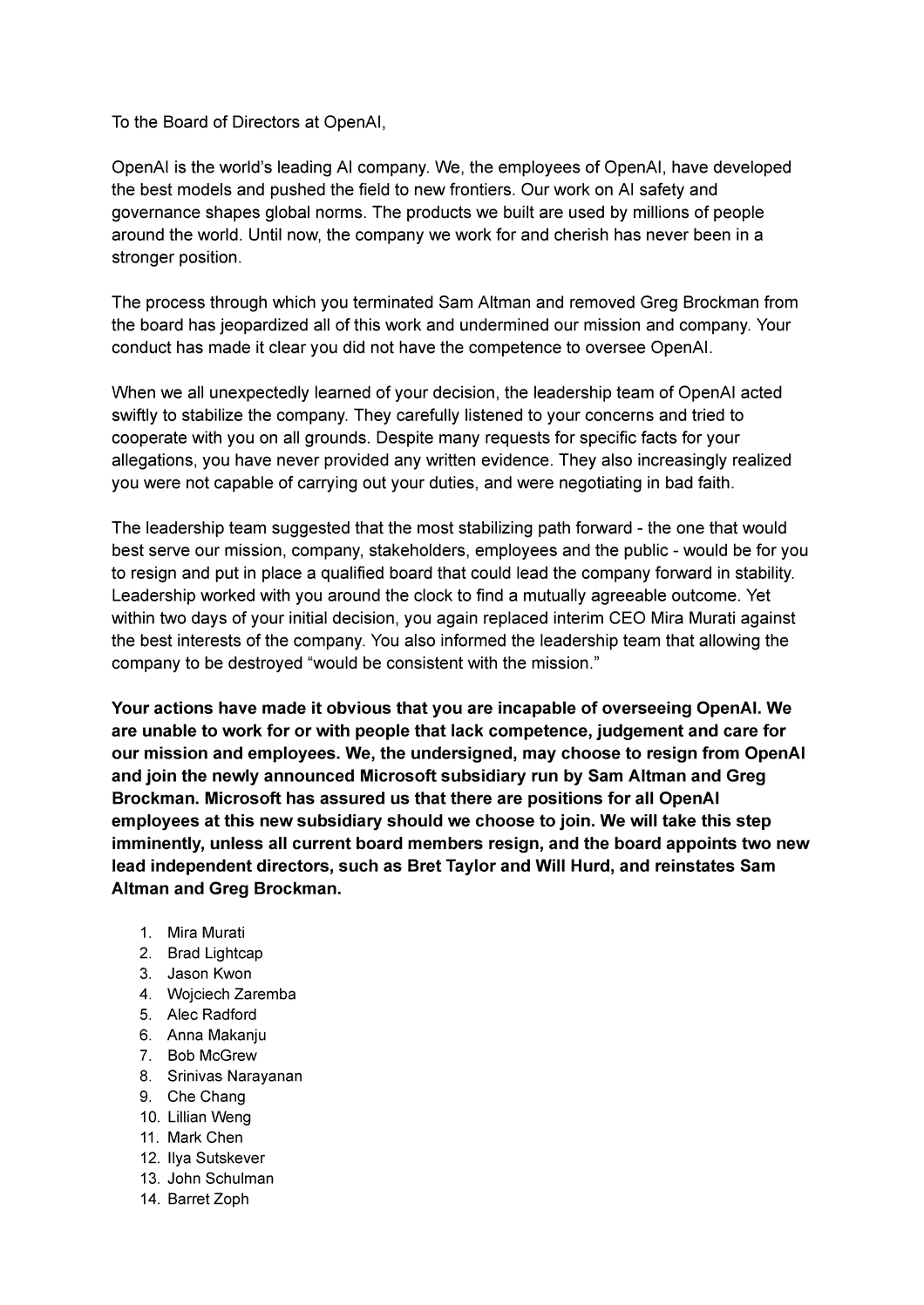

Today, more than 700 of OpenAI’s 770 employees signed a public letter to the board threatening to resign if Sam Altman does not return as CEO.

With the overwhelming majority of the staff signing the letter, this could mark the end of OpenAI. And Microsoft is essentially inviting them all to join Sam and Greg in their new AI research lab.

What In The World Was Ilya Sutskever Thinking?

While we don’t know all the details of Sam Altman’s shock termination, it is clear that Ilya Sutskever coordinated it with the other board members. Did he completely misjudge how Microsoft and OpenAI’s staff would react? He certainly failed to foresee the turmoil and chaos that followed.

On Sunday, Sutskever, with the remaining members of OpenAI’s board, released a statement saying that,

[The board] stood by its decision as the only path to advance and defend the mission of OpenAI.

But by Monday morning, Ilya Sutskever had signed the staff letter demanding that the board, of which he is the most prominent member, resign.

We told you this was getting wild: the board member who orchestrated the CEO’s termination has now joined the rest of the staff in demanding that the board resign.

If that weren’t enough, we woke up on Monday morning to the following tweet from Ilya Sutskever:

Underlying the Chaos at OpenAI

We hear there is still a push to bring Sam Altman back as CEO, so it’s impossible to draw any firm conclusions yet. According to The Information, OpenAI executives will continue discussions with Sam Altman, interim CEO Emmett Shear, and the board of directors on Tuesday morning in an effort to save OpenAI, or, as they put it, to “reunify” the company.

As we wait to hear of further developments, here are a couple of thoughts on where we are on Monday night, November 20th.

First, this entire saga is not really about a leadership shakeup, or even the implosion of the best-known AI startup in Silicon Valley. Underlying these events are profound disagreements over the path to artificial general intelligence (AGI).

The chaos at OpenAI isn’t following the trajectory of any traditional Silicon Valley tech startup. OpenAI is a nonprofit organization with a complex structure that includes a for-profit subsidiary. Timothy B. Lee writes in Understanding AI,

“When Musk and Altman unveiled OpenAI, they also painted the project as a way to neutralize the threat of a malicious artificial super-intelligence,” Cade Metz wrote in a 2016 Wired profile of the OpenAI. “Of course, that super-intelligence could arise out of the tech OpenAI creates, but they insist that any threat would be mitigated because the technology would be usable by everyone.”

Nick Bostrom, the philosopher whose 2014 book inspired Musk and Altman’s concerns, didn’t find this convincing at all. “If you have a button that could do bad things to the world, you don’t want to give it to everyone,” Bostrom told Wired in 2016.

Tensions over this question have dogged the organization ever since. They ratcheted up in recent years as OpenAI raised money to build and commercialize more capable models. And they finally boiled over with Friday’s decision to fire Altman.

The drama and chaos at OpenAI this weekend underscore the delicate balance between responsible AI development and the economic realities of the AI industry. Sam Altman and Ilya Sutskever never disagreed about the challenges we face as AI becomes increasingly powerful, or about the need for ethical guidelines and AI regulation. They disagreed about the route we must take to reach ethical AGI.

As Alberto Romero noted,

For most people, Altman and Sutskever are on the same page. It’s clear that the latter, at least, doesn’t agree with that. What’s unclear is who is right: Can you build AGI without a strong product roadmap? Is it safer to do it behind closed doors rather than following a process of iterative deployment — or more open still, as Meta is doing with the Llama family of models? Can anyone get to AGI without satisfying, at the same time, the economic requirements of the shareholders and investors?

That disagreement resulted in Sam Altman’s termination and the current chaos at OpenAI. Tragically, the result may be precisely what Ilya Sutskever didn’t want – more rapid development of AI products in the hands of large tech companies such as Microsoft, where ethics will never be as front and center as they were at OpenAI. Ilya Sutskever may have destroyed the very thing that was most important to him – a nonprofit AI organization where ethical considerations guided the development of AI and the long-term goal of AGI.

For the moment, at least, Sam Altman comes off looking like the hero, Ilya Sutskever as the failed tragic character, and Microsoft’s Satya Nadella steps in to pick up the spoils of the battle. How history will see this weekend’s events is anyone’s guess. Perhaps AGI will rewrite the story to suit its own needs in the future; you never know.

We have a feeling there is still more to come in this saga, and we will continue to update as we learn more.

Emory Craig is a writer, speaker, and consultant specializing in virtual reality (VR) and artificial intelligence (AI) with a rich background in art, new media, and higher education. A sought-after speaker at international conferences, he shares his unique insights on innovation and collaborates with universities, nonprofits, businesses, and international organizations to develop transformative initiatives in XR, AI, and digital ethics. Passionate about harnessing the potential of cutting-edge technologies, he explores the ethical ramifications of blending the real with the virtual, sparking meaningful conversations about the future of human experience in an increasingly interconnected world.