AR glasses are still a ways off. There's the challenge of packing the hardware and a battery into the thin frame of our eyewear. Even harder are the technical problems of making objects in augmented reality look realistic.

It turns out that Microsoft has been working on exactly this problem. In a paper for SIGGRAPH 2017, its research team offers a glimpse of where we're headed. The end goal is the one we are all striving for: AR glasses that match the realism of human vision.

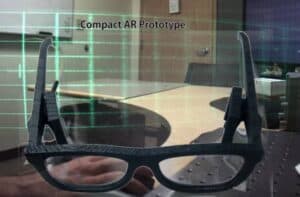

Microsoft’s prototype AR glasses

Here are the details of what Microsoft calls an "admittedly crude prototype," from its Research Blog:

> Last week at the SCIEN Workshop on Augmented and Mixed Reality, a group of industry and academic researchers met to discuss the future of virtual and mixed reality (VR/MR). One goal was clear: we'd all like to put on devices that look and feel like ordinary eyeglasses but can bring us to new places. We also want to maintain the full fidelity of human vision while experiencing VR/MR.
>
> One of the greatest challenges to building near-eye displays is balancing the trade-off between the field of view and device form factor. A wide field of view is desirable so that we can see the whole scene at once; at the same time, we desire a lightweight, compact and aesthetically pleasing design that is both comfortable to wear all day and socially acceptable. Today we find these qualities only in isolation: sunglasses-like displays with very narrow fields of view (<20 degrees horizontal), or goggle- or helmet-sized displays with wide fields of view (>= 80 degrees horizontal). In our work, we created a simple prototype display with a sunglasses-like form factor, but a wide field of view (80 degrees horizontal), shown below.

Microsoft's solution is to "play back" holograms in the eyewear and use software to reproduce the cues we naturally rely on to judge focal depth. As the researchers note, ". . . most near-eye displays present all objects at the same focus." In real life, though, we judge depth partly from focus cues: objects nearer or farther than the one we're looking at appear blurred. Their approach is to render every part of a scene at its correct focal depth, pixel by pixel.

The result is AR glasses with an 80° field of view, natural depth cues, and highly realistic images. The glasses can even correct for an individual's astigmatism. How realistic will the AR world look through this device? Sharp enough to render text just one pixel wide.

A long road ahead

Needless to say, this isn't a product you'll see any time soon. The AR glasses in the demo run off an external processor and power source. Still, it's a fascinating development, and Mashable pulls out the ultimate goal from the research paper:

> In future work, we plan to integrate all these capabilities into a single hardware device while expanding the exit pupil to create a practical stereo display. . . . In this way, we hope to become one step closer to truly mobile near-eye displays that match the range of capabilities of human vision.

There's a lot more to come in augmented reality this year. Microsoft has a head start thanks to its years of work on HoloLens. Facebook and Google both have major AR platforms under development. Snap is already out the door with its fascinating, if limited, Spectacles. And Apple is clearly hard at work in this area, having recently hired NASA augmented reality expert Jeff Norris.

In the meantime, if you want more technical detail on how AR might make its way into our eyeglasses, here's a video from the Microsoft Research team.

https://youtu.be/lN4tFV16mU8

Emory Craig is a writer, speaker, and consultant specializing in virtual reality (VR) and generative AI. With a rich background in art, new media, and higher education, he is a sought-after speaker at international conferences. Emory shares unique insights on innovation and collaborates with universities, nonprofits, businesses, and international organizations to develop transformative initiatives in XR, GenAI, and digital ethics. Passionate about harnessing the potential of cutting-edge technologies, he explores the ethical ramifications of blending the real with the virtual, sparking meaningful conversations about the future of human experience in an increasingly interconnected world.