The lightning-fast adoption of generative AI tools and their potential to shake the foundations of society has triggered a wave of urgent calls for guidelines and regulations. AI experts, industry leaders, and governments alike are advocating for some degree of regulation to manage the impact of AI on education, work, and society as a whole. The tech landscape has been transformed beyond recognition in just five months; now, imagine what the next five months will bring.

In a sense, the current AI scenario mirrors the response to the rapid expansion of the railroads in the late 19th century. Their disruptive power led to the creation of the first federal regulatory agency in the United States, the Interstate Commerce Commission, in 1887. Dangerous working conditions weren't the only catalyst for government intervention. Just as important was the railroads' capacity to make or break local economies and upend the lives of those who relied on them. With generative AI wielding even greater power to disrupt work, education, and politics, the growing chorus for regulation is hardly surprising.

As the saying goes, history repeats itself. Whether we’ll learn from past mistakes regarding over- or under-regulation remains uncertain. Let’s take a quick tour of the current AI climate, keeping in mind that the landscape is changing at breakneck speed.

Calls For A Pause In AI Development

Last month, we saw over a thousand AI leaders call for artificial intelligence labs to pause the development of more advanced systems, writing in an open letter that AI tools pose “profound risks to society and humanity.”

Signatories included Steve Wozniak, Apple’s co-founder; Elon Musk, originally a co-founder of OpenAI; Rachel Bronson, the president of the Bulletin of the Atomic Scientists; and many others. They cautioned that,

[AI developers are] . . . locked in an out-of-control race to develop and deploy ever more powerful digital minds that no one — not even their creators — can understand, predict or reliably control.

OpenAI’s CEO, Sam Altman, weighed in on the letter during a recent MIT event.

There’s parts of the thrust that I really agree with . . . We spent more than six months after we finished training GPT-4 before we released it, so taking the time to really study the safety of the model … to really try to understand what’s going on and mitigate as much as you can is important.

However, he noted that the letter “lacked technical nuance about where we need the pause.” Bill Gates, Google CEO Sundar Pichai, and others criticized the letter, arguing that a pause in AI development was impractical and ultimately impossible to enforce without government involvement.

Reid Hoffman, co-founder of LinkedIn and a friend of Elon Musk, offered his own perspective on Musk's role in the letter:

Elon tends to have a ‘I must build it with my own hands.’ You look at what amazing stuff he’s done with SpaceX and Tesla, and it has the kind of ‘It’s only great if I do it.’

But Hoffman also feels that a pause in AI development is the wrong approach:

It’s overall, I think, broadly a mistaken effort because actually I think everything we’ve seen so far in terms of development is the best ways to get safety is through larger scale models — they actually train to align better with human interests, and so the call to slow down is actually, in fact, less safe.

TLDR: Good intentions aside, a voluntary pause in AI development isn’t going to happen. That ship has sailed.

Italy Clashes with ChatGPT and Replika AI Chatbot

Last month, Italy made headlines as the first Western country to ban ChatGPT over privacy concerns and the handling of personal data. It was a move OpenAI wasn't prepared for, since the company hadn't even opened an office in the EU at the time.

Italy's Data Protection Authority (Garante per la protezione dei dati personali) had already taken action against Replika, the popular AI-powered personal chatbot created by Eugenia Kuyda, the company's CEO. Replika is designed to help you share your thoughts, feelings, beliefs, experiences, memories, and dreams, which Kuyda calls your "private perceptual world." But Replika was also being used for erotic roleplay (ERP), and the Italian agency was concerned about its impact on minors and emotionally vulnerable people.

The agency noted that the AI chatbot platform allowed users to sign up with nothing more than their names, email addresses, and genders, even though the app's ability to influence users' moods "may increase the risks for individuals still in a developmental stage or in a state of emotional fragility." The full decision is available in both Italian and English.

OpenAI took steps to restore ChatGPT access in Italy last week, while Replika is still working to get its ban lifted.

Concerns and Calls for Regulation By AI Leaders

Leaders in the field have voiced growing concern, along with calls for regulation. Here are some key moments.

Sam Altman, CEO, OpenAI

In a series of tweets in February, Sam Altman, CEO of OpenAI, speculated on the need for some government regulation.

We also need enough time for our institutions to figure out what to do. Regulation will be critical and will take time to figure out; although current-generation AI tools aren’t very scary, i think we are potentially not that far away from potentially scary ones.

Sundar Pichai, CEO of Google and Alphabet

More recently, Google's Sundar Pichai argued in a CBS "60 Minutes" interview that guidelines and regulations are necessary to prevent bad actors from abusing AI. He gave the example of using AI to quickly create and release deepfake videos that could spread disinformation and cause harm at a societal scale. He also stressed that the development of AI systems should involve a broader group than the tech industry's AI experts.

How do you develop AI systems that are aligned to human values, including morality? This is why I think the development of this needs to include not just engineers, but social scientists, ethicists, philosophers, and so on, and I think we have to be very thoughtful.

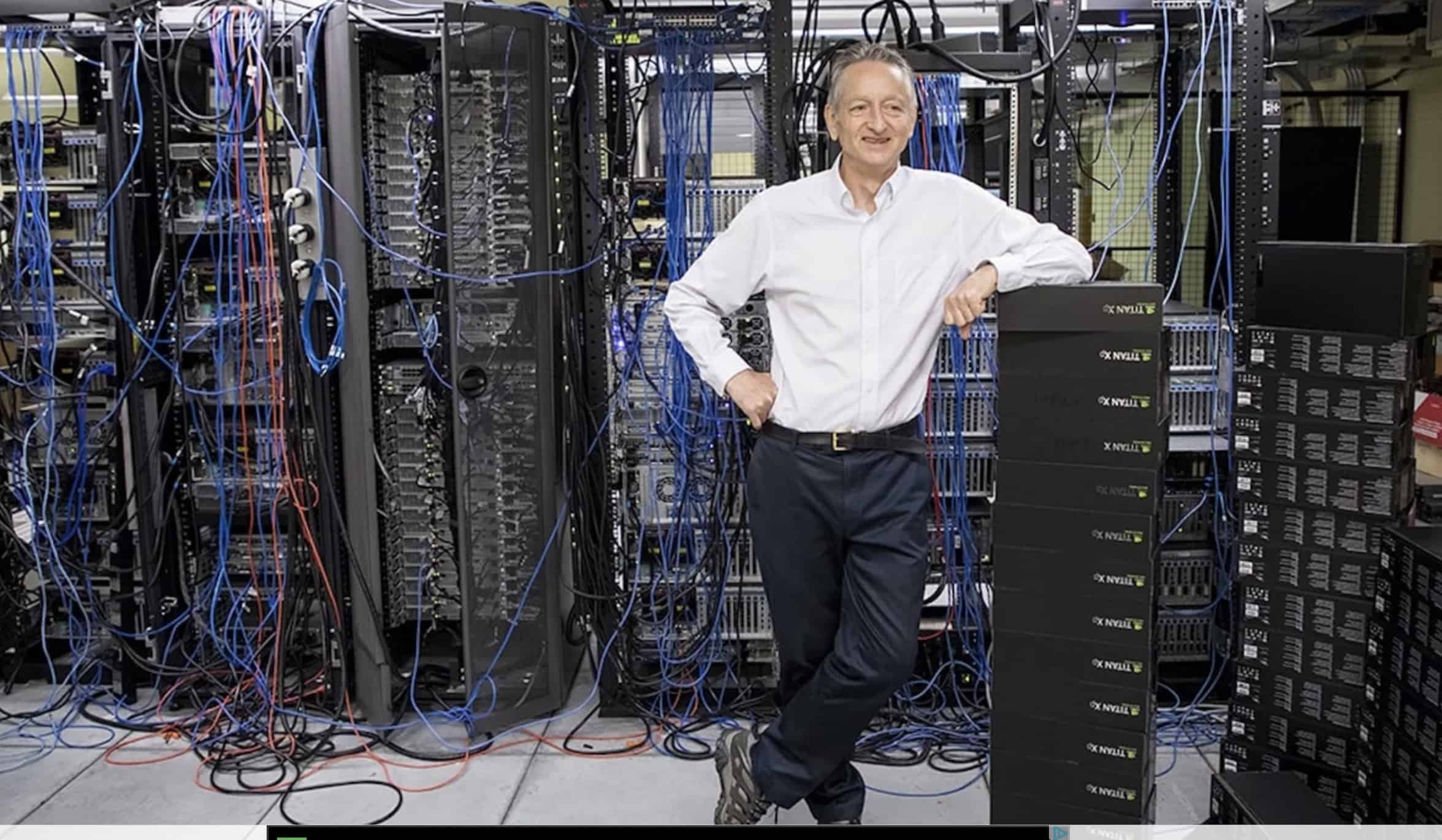

Geoffrey Hinton, AI Pioneer

And just this morning, we learned that artificial intelligence pioneer Geoffrey Hinton was stepping down from Google after half a century of work on AI.

Hinton has always been concerned about the ethical aspects of AI, basing his research projects in Canada, where funding is less tied to military contracts. But over the years, he’s become increasingly concerned about the power of AI to disrupt society.

As Cade Metz wrote today in The New York Times:

Dr. Hinton’s journey from A.I. groundbreaker to doomsayer marks a remarkable moment for the technology industry at perhaps its most important inflection point in decades. Industry leaders believe the new A.I. systems could be as important as the introduction of the web browser in the early 1990s and could lead to breakthroughs in areas ranging from drug research to education.

But gnawing at many industry insiders is a fear that they are releasing something dangerous into the wild. Generative A.I. can already be a tool for misinformation. Soon, it could be a risk to jobs. Somewhere down the line, tech’s biggest worriers say, it could be a risk to humanity.

Hinton summed up his view of the near-term future:

Look at how it was five years ago and how it is now. Take the difference and propagate it forwards. That’s scary.

EU Lawmakers Work To Pass AI Legislation

EU lawmakers have been working on a new draft of the AI Act, first proposed in 2021. European Commission Executive Vice President Margrethe Vestager, the EU's tech regulation chief, stated that the bloc anticipates a "political agreement this year" on AI legislation, which could include labeling requirements for AI-generated images or music to address copyright and educational risks. That approach differs from the one in the United States, where copyright questions are being left to the courts.

The EU has been working toward a three-layered approach: one layer to set out responsibilities across the full range of AI; a second to ensure that foundation models have guardrails; and a third to address content issues around generative AI models such as OpenAI's ChatGPT.

TechCrunch has a detailed analysis of the proposed regulatory framework and the debate over the timeline. There are concerns that if the Act doesn’t come into force until 2025, generative AI may already be beyond control.

Silicon Valley Wants A Carve-out

Not surprisingly, the major AI players in Silicon Valley have been lobbying the EU for a generative AI carve-out, arguing that the framework should not apply to providers of large language models (LLMs). In their view, the rules should apply only downstream, to those who deploy LLMs in 'risky' ways. So far, the EU doesn't seem inclined to go along.

However, it looks fairly certain that the EU will require AI-driven chatbots to include some form of labeling so that they are not mistaken for actual human beings. That’s not a significant issue at the moment, though some Replika users do relate to the avatars on their phones as if they were actual people. But as our virtual environments become more sophisticated and realistic, it will become a significant issue.

G7 Nations Aim For “Risk-Based” Regulation

Lastly, the G7 nations (Canada, France, Germany, Italy, Japan, the United Kingdom, and the United States) recently agreed on a “risk-based” regulatory approach to foster responsible AI development. At a two-day meeting in Japan, digital ministers from the G7 economies discussed the necessity of preserving an “open and enabling environment” for AI development while ensuring it adheres to democratic values.

G7 ministers agreed to hold further discussions on generative AI at the upcoming G7 Summit in Hiroshima, covering governance, intellectual property rights, transparency, and the fight against disinformation and foreign manipulation. Those are all significant issues, but given Europe's long tradition of regulation, the EU will likely act long before the G7 gets beyond the discussion stage.

The Self-Regulation Option

It’s not likely that the main AI players could come together to create a labeling framework – the AI space is too competitive. But TikTok is already rumored to be taking steps to do it on its own platform.

According to The Information, TikTok is developing a mechanism for content creators to label their work as AI-generated. However, when asked about the development of a self-labeling tool, TikTok told TheWrap:

We don’t have anything to announce at this time. At TikTok we believe that trust forms the foundation of our community — and we are always working to advance transparency and help viewers better understand the content they see.

Relying on trust is precisely what concerns so many when it comes to the potential dangers of generative AI.

The Need for AI Regulations: A Double-Edged Sword

The question of AI regulations and their extent is a complex one. On the one hand, regulations can stifle creativity and insights that can only come from cultural outliers. The risk of AI regulation is that it could permanently lock us into the cultural standards of our era.

It calls to mind Apple's famous one-minute "Think Different" ad, with its black-and-white footage of 17 iconic 20th-century personalities, all of them outsiders who changed the world. The creativity and insights of artists and others positioned at the fault lines of culture push society forward and underpin the battle for human rights.

On the other hand, AI is leading us into a fascinating yet unsettling realm of ubiquitous authorless text, erasing the lines between the real and the virtual. As we lose that anchor to reality, several arguments favor some degree of regulation:

- AI could perpetuate or even amplify existing biases and prejudices, leading to unfair treatment of certain groups of people in hiring, law enforcement, and other areas.

- AI systems could violate user privacy, leading to data breaches or unauthorized use of personal information.

- AI developments could accelerate economic disruption, leading to massive unemployment and social unrest.

- Advanced AI systems could be weaponized, both physically and digitally, posing severe threats to global security.

- Without regulation, we could see large tech companies and governments monopolizing AI research and development, leading to a massive imbalance of power and wealth.

- As we’ve seen with social media, bad actors could exploit unregulated AI for malicious purposes, such as spreading disinformation and manipulating public opinion.

These are just a few reasons why AI will require some degree of regulatory oversight. Striking a balance between preserving freedom and preventing the misuse of technology will be one of the most significant decisions of our decade.

In our deeply interconnected world, strong regulations from the EU will shape AI use in countries well beyond Europe. The decisions on regulatory oversight will shape the future of AI and our world in profound ways.

As always, we'll be watching this space closely as it impacts education, business, entertainment, and our interactions with one another.

Emory Craig is a writer, speaker, and consultant specializing in virtual reality (VR) and artificial intelligence (AI) with a rich background in art, new media, and higher education. A sought-after speaker at international conferences, he shares his unique insights on innovation and collaborates with universities, nonprofits, businesses, and international organizations to develop transformative initiatives in XR, AI, and digital ethics. Passionate about harnessing the potential of cutting-edge technologies, he explores the ethical ramifications of blending the real with the virtual, sparking meaningful conversations about the future of human experience in an increasingly interconnected world.