Thinking of setting up an AI ethics board? Google offered a quick lesson the past two weeks on how not to tackle the ethical questions in artificial intelligence. On March 26th, Google announced the formation of the Advanced Technology External Advisory Council (ATEAC).

This seems to be a fad in Silicon Valley these days. Companies move into AI, and public concern grows. Let’s create an ethics board. Problem solved.

This fails on so many levels – not least because Google has been working on AI for some time. What triggered the sudden need to create a (not-very-well-thought-out) ethics board this spring? And to stack it with controversial members?

Were more employee protests coming like last summer’s letter and resignations? Was Google just trying to appease all its critics as it becomes the public square of our digital lives? The latter role is not so bad – except when you’re a for-profit company.

We’ll never know.

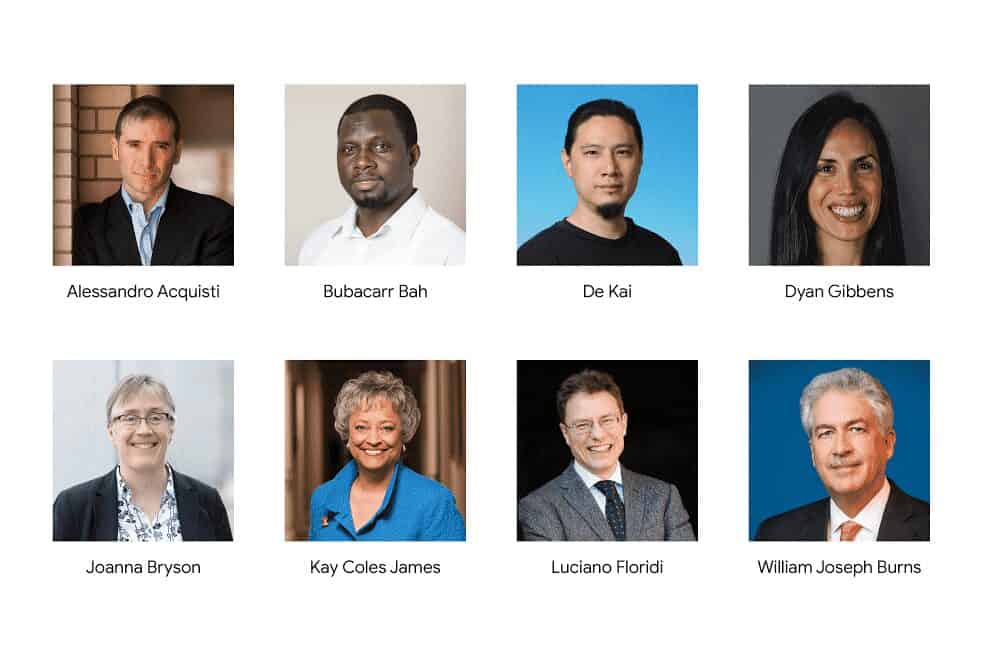

What we do know is that the board was to guide “responsible development of AI” at Google. That’s a tall order for eight unpaid people meeting four times a year in a company that employs 98,771 people. No matter: the board didn’t last more than a week. Controversy over the members, a petition, a resignation, and it was no more.

Yes, ethics is fleeting. Even more so when there’s no commitment.

Ironically, the board did get involved in an ethical dispute. Forget about AI – it was a debate over who should serve as members on the board.

AI Ethics Boards Are Not The Solution

Whatever Google may have had in mind, the board was never going to have an impact on Google’s work on AI. As Kelsey Piper argued in Vox,

AI ethics boards like Google’s, which are in vogue in Silicon Valley, largely appear not to be equipped to solve, or even make progress on, hard questions about ethical AI progress.

A role on Google’s AI board was an unpaid, toothless position that cannot possibly, in four meetings over the course of a year, arrive at a clear understanding of everything Google is doing, let alone offer nuanced guidance on it. There are urgent ethical questions about the AI work Google is doing — and no real avenue by which the board could address them satisfactorily. From the start, it was badly designed for the goal.

As we’ve often said, programmers should be working side-by-side with ethicists. An ethics board is almost an ideal way to make sure that never actually happens.

The Challenges Raised by AI

One member of the now-canceled board summed up the current developments in AI. De Kai, a professor in the Department of Computer Science and Engineering at the Hong Kong University of Science and Technology, argues that AI is not an incremental technological development.

AI is the single most disruptive force that humanity has ever encountered and my concern is that so much of the discussion that we hear about now is very incremental. We are near an era when people can easily produce weapons such as fleets of armed AI drones … the cat is out of the bag.

Speaking at a San Francisco town hall event earlier this year, Google’s CEO, Sundar Pichai, put it more directly:

AI is one of the most important things humanity is working on. It is more profound than . . . electricity or fire.

We couldn’t agree more. But it leaves something of a paradox: a development that important apparently warrants nothing more than eight unpaid people meeting four times a year with no actual power. What exactly was an AI ethics board supposed to do (assuming its members could even agree to meet)?

Too often, these boards only serve as a veneer, a superficial commitment that allows business to continue as usual. As the AI Now Institute wrote in a 2018 report:

Ethical codes may deflect criticism by acknowledging that problems exist, without ceding any power to regulate or transform the way technology is developed and applied. We have not seen strong oversight and accountability to backstop these ethical commitments.

Our Concern – AI and VR

Leaving aside the analogies with fire and electricity, or De Kai’s (admittedly scary) near-term vision of AI in weapons, our concerns go deeper. The obvious impact of artificial intelligence is one thing, but its concealed impact is far more troubling.

We’ve already seen the power of AR, VR, and Mixed Reality to create deeply engaging immersive environments. But what happens when AI and the virtual converge? When AI is embedded into our virtual environments and avatars?

We’ve seen the forerunner of this in Magic Leap’s Mica, an AI-driven avatar. Mica is not just a more realistic mixed reality avatar. She exists on a fundamentally different scale. Your senses are captivated and you relate to her as if she is a real person. There is a deep compulsion to interact with her . . . because she is interacting with you. And Mica doesn’t even talk yet.

It is always the convergence of technologies that is most powerful. And it’s up to us to learn how to handle the seduction of both our minds and our senses – simultaneously.

Otherwise, the cat’s most emphatically out of the bag. And no AI ethics board will ever coax it back in.

Emory Craig is a writer, speaker, and consultant specializing in virtual reality (VR) and artificial intelligence (AI) with a rich background in art, new media, and higher education. A sought-after speaker at international conferences, he shares his unique insights on innovation and collaborates with universities, nonprofits, businesses, and international organizations to develop transformative initiatives in XR, AI, and digital ethics. Passionate about harnessing the potential of cutting-edge technologies, he explores the ethical ramifications of blending the real with the virtual, sparking meaningful conversations about the future of human experience in an increasingly interconnected world.