There was no better way to wrap up GDC 2019 than Valve Software’s Mike Ambinder talking about brain-computer interfaces (BCI) in VR. No need to worry about any immediate developments, though. It was a highly speculative talk.

We’re still trying to develop decent hand controllers for our virtual environments. But Ambinder is looking further ahead, to where we might be a few decades from now.

Brain-computer interface solutions raise profound ethical challenges – and not just in virtual reality. BCI could far surpass VR in its power to create immersive experiences. All our work to shoehorn miniature screens into plastic headsets would seem primitive if we could trick our brains into seeing what isn’t actually there.

But what concerns us is that the ethical issues are seldom raised at tech conferences. We’d feel much better if ethicists were working alongside tech developers – or at least if they were talking to each other. Instead, the groups silo themselves. One side waxes enthusiastic about the potential of technology; the other veers toward middling or dystopian visions of the future.

Neither conversation is particularly productive. Technology is more than a tool – it shapes our experience of ourselves and the world. That holds as much for the early railways of the Industrial Revolution as for the way virtual reality will reshape human experience. If we’re going to jack ourselves into the Matrix, we should at least explore the possibilities before we get there.

Or before there’s no way to unplug.

Mike Ambinder: A Brain-Computer Interface in VR

In his talk, Mike Ambinder was upfront that Valve had no major BCI projects underway.

This is supposed to be speculative. This is one possible direction things could go. [Ars Technica]

But as he pointed out, we’re on the cusp of the journey into a brain-computer interface in VR. We can already track an array of responses in games, and eye-tracking is moving toward broader implementation. We expect the latter will be a standard feature in most high-end HMDs within two years.

At GDC 2019 last week, Tobii released an SDK and associated libraries for eye-tracking in VR. Having superhuman powers just through your gaze is utterly fascinating.

For now, we’ll have to content ourselves with more conventional physiological data. And the only things holding us back are cost and the size of some of the hardware.

As Ambinder says,

We can measure responses to in-game stimuli. And we’re not always getting [data] reliably, but we’re starting to figure out how. Think about what you’d want to know about your players. There’s a long list of things we can get right now with current technology, current generation analysis, and current generation experimentation.

Some of that data – heart rate, for example – could raise its own ethical issues. But the next step, a brain-computer interface in VR, won’t happen in the near future. There are simply too many hardware challenges in translating neural signals into something software could use in either gaming or virtual reality.

Even Larger VR Headsets?

Mike Ambinder joked that brain-computer interfaces in virtual reality might be a way to get people to love their bulky VR headsets. They might even be willing to wear a larger one. We know we’re not going to have virtual reality through minimalist goggles – even ones the size of the Magic Leap headset – anytime soon.

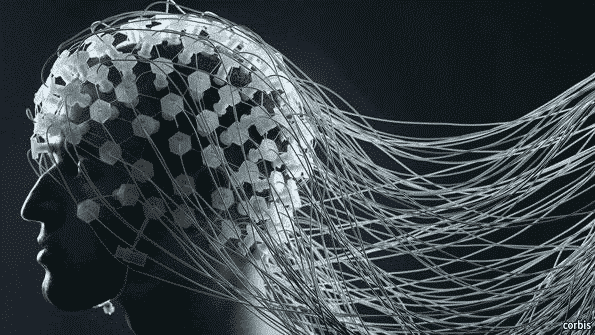

You can take limited EEG readings with smaller sensors – the Muse headband is a stylish approach to technology-enhanced meditation. But truly accurate readings require hardware that makes you look like an alien. The only way to get all those sensors attached to your head would be some form of headgear.

VR helmets. I knew this would happen.

BCI Challenges

Neurable has been working on this for some time and demoed what it billed as the first mind-controlled game at SIGGRAPH 2017. But it only used a modified Vive headset – you’ll need a lot more sensors than that to make this work. As Road to VR notes,

Companies like Neurable have already begun productizing EEG devices especially built to work with VR devices, letting people “control software and devices using only their brain activity,” the company claims.

That said, EEG data isn’t a perfect solution. Ambinder compared it to sitting outside a football stadium and trying to figure out what’s happening on the field just by listening to the intensity of the crowd’s reaction. The current generation of BCI devices is noisy, and EEG is one of the noisiest because it has to pick up neuronal signals through the skull, scalp, and hair.

The football stadium is a good analogy for where we are now. But as the technology progresses, brain-computer interfaces will get better at separating the signal from the noise.

And there will be both positive and negative implications.

Transforming Lives

For accessibility purposes, BCI would be remarkable both in and outside of VR. We’ve already seen incredible advances here. It was only in 2015 that researchers at the University of Houston developed an algorithm that allowed someone with a prosthetic hand to grasp an object like a water bottle just with their thoughts.

Think for a moment about what that means as a technological breakthrough. A prosthetic device controlled by human thoughts. In the same year, the press was all over Palmer Luckey’s breakthrough VR headset (pre-orders started January 6, 2016). It would help to keep our priorities in perspective.

Not surprisingly, Ambinder takes this much further, speculating on the ultimate enhancement of our senses.

"Can we make you see in infrared like the Predator? Give you access to spatial location data, or even echolocation? Could we add a sense? Improve your sense of touch? Help you notice new frequencies?" Ambinder continued. "Taste and smell things you’ve never tasted or smelled? Focus your attention? Stimulate certain areas of your brain to recruit neurons for other tasks? Help you hold more memory at once? Improve memory retrieval?" [Ars Technica]

Developments like that would transform the lives of people with disabilities. And they would impact far more than gaming; they would revolutionize our immersive experiences in VR. We’d have superhuman experiences in virtual environments and easily interact with AI-driven beings like Magic Leap’s Mica.

Welcome to the Matrix.

Privacy and Hacking of the Self

As we noted, tech conferences downplay the ethical challenges. No one wants to undercut what they’re developing – or selling. Ambinder mentioned a few of them in passing, but the stakes seem much higher when you step outside the tech bubble.

Consider just the privacy issues for brain-computer interfaces described in GE Reports:

The potential ability to determine individuals’ preferences and personal information using their own brain signals has spawned a number of difficult but pressing questions: Should we be able to keep our neural signals private? That is, should neural security be a human right? How do we adequately protect and store all the neural data being recorded for research, and soon for leisure? How do consumers know if any protective or anonymization measures are being made with their neural data? As of now, neural data collected for commercial uses are not subject to the same legal protections covering biomedical research or health care. Should neural data be treated differently?

Right now, we haven’t come close to resolving the privacy challenges of our personal data on social media. In the future, the privacy of our neural data could become a fundamental issue. When you look at what happened to the Norwegian company Norsk Hydro last week (servers hacked, data encrypted), try to imagine parallel developments on a personal level.

Today, only technology devices get hacked. But it’s not that far-fetched to think of a BCI-dependent future where individuals are hacked. You can always unplug, you say? That only works until a hacker names a price for letting you.

Time for a Conversation on the Ethical Issues

You won’t see brain-computer interfaces in VR or other technologies in the next few years. Given the challenges Valve seems to have in even releasing a VR headset, they may be the last to jack into our brains.

But BCI developments are swirling around us. DARPA is funding partnerships between companies and universities that could result in significant breakthroughs in treating brain disorders. Elon Musk has created his own company, Neuralink, to work toward the same goal and move us closer to a form of “consensual telepathy.”

Mike Ambinder’s talk might have been completely “speculative,” but it’s time to take these developments seriously. If we’re not careful, BCI will hit us like a tsunami that overwhelms the most fundamental elements of human experience.

For us, it’s not about unbridled optimism or despair. It’s about instigating a conversation with multiple perspectives. At the very least, we owe that to those who will inherit the future we are creating.

Emory Craig is a writer, speaker, and consultant specializing in virtual reality (VR) and artificial intelligence (AI) with a rich background in art, new media, and higher education. A sought-after speaker at international conferences, he shares his unique insights on innovation and collaborates with universities, nonprofits, businesses, and international organizations to develop transformative initiatives in XR, AI, and digital ethics. Passionate about harnessing the potential of cutting-edge technologies, he explores the ethical ramifications of blending the real with the virtual, sparking meaningful conversations about the future of human experience in an increasingly interconnected world.