Mind Controlled VR sounds like a sci-fi world, but it’s where we’re headed. According to GMA News Online, there’s a fascinating project at the University of Washington’s Computer Engineering Department. You won’t be playing in a mind controlled VR environment next year, but in a decade or two, you may navigate your tech interfaces through your brain waves.

How mind controlled VR works

The UW project relies on a technique called Transcranial Magnetic Stimulation (or TMS), which sounds a bit like a massage in a future Star Trek movie. Here’s a quick description of their brain-computer interface (BCI):

https://www.youtube.com/watch?v=tuUA5UDoxQM

According to Rajesh Rao, Professor of Computer Science & Engineering, they are working on non-invasive ways of stimulating the brain:

The fundamental question that we were asking here was: Is it possible to send information from artificial sensors or computer-created worlds directly into the brains so that the brain can start to understand that information and make use of it to solve a task?

Right now, the process is rudimentary: participants can navigate only simple mazes, with brain stimulation as their sole input.

When the TMS machine sends a signal to the test subject’s brain, it is perceived as a very brief flash of light, known as a phosphene. The person being tested is then asked to navigate through a simple maze, moving forward or down, depending on whether they saw a phosphene or not. A researcher controls the experiment, choosing from a variety of different maze options.
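To make the decision rule concrete, here is a minimal sketch of the maze logic in Python. It is not the researchers’ software. The grid, the function names, and above all the encoding (a phosphene means the path ahead is blocked, so move down; no phosphene means move forward) are assumptions made for illustration, since the article only says the move depends on whether a phosphene was seen.

```python
from typing import List, Tuple

# Hypothetical maze for illustration: '.' is open floor, '#' is a wall.
MAZE = [
    "..#.",
    "...#",
    "....",
]


def phosphene_signal(maze: List[str], row: int, col: int) -> bool:
    """Assumed encoding: the stimulator fires (True) when the cell ahead is a wall."""
    return maze[row][col + 1] == "#"


def navigate(maze: List[str], start: Tuple[int, int] = (0, 0),
             max_steps: int = 50) -> Tuple[List[str], Tuple[int, int]]:
    """Walk the maze using only the one-bit phosphene signal."""
    row, col = start
    moves: List[str] = []
    for _ in range(max_steps):
        if col == len(maze[row]) - 1:
            break  # reached the right-hand edge, treated as the exit here
        if phosphene_signal(maze, row, col):
            # Phosphene seen: assume the way ahead is blocked, so go down.
            if row + 1 >= len(maze) or maze[row + 1][col] == "#":
                break  # nowhere to go; stop rather than walk through a wall
            row += 1
            moves.append("down")
        else:
            # No phosphene: the way ahead is clear, so go forward.
            col += 1
            moves.append("forward")
    return moves, (row, col)


if __name__ == "__main__":
    path, final_position = navigate(MAZE)
    print(path, final_position)
```

The point of the sketch is how little information the participant receives: a single yes-or-no signal per step is enough to get through a simple maze, which is why the researchers could start with something as basic as a flash of light.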

Other projects are underway, including one at the University of British Columbia and another at Cornell University, where researchers designed a Brain-Computer Interface (BCI) and, using an EEG reader, were able to play Pong through brain waves. One of the longest-running projects is BrainGate, with a decade of research at Brown University. It differs from the UW project in that it relies on an invasive procedure. As MIT Technology Review notes, it’s making remarkable strides in helping the disabled use technology and perform daily tasks.

Time to axe the mouse paradigm?

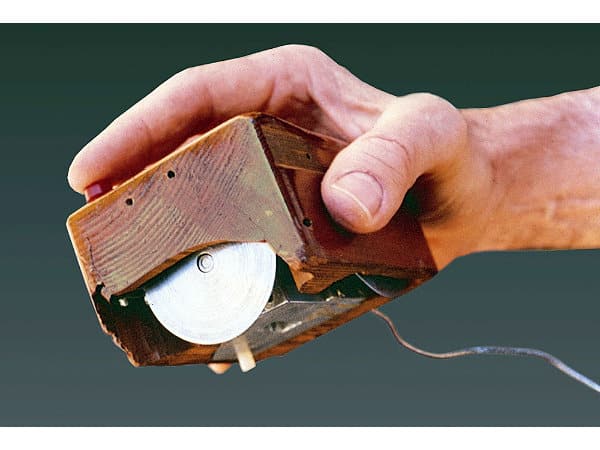

BCI projects have far-reaching implications for our basic interaction with technology. For decades, we’ve relied on the paradigm defined by Douglas Engelbart’s mouse. We control computers with our hands. There have been elegant refinements by Steve Jobs and others, and of course we’ve moved on to trackpads.

Two years ago there was a rush of experiments with wearable tracking rings, but the mouse and trackpad have endured. Despite all the advances in the Digital Age, we still use our hands to navigate our computer interfaces.

Voice control is making inroads, especially in our mobile devices. But do a quick survey of the smartphone users around you now – the majority are still tapping their screens, not talking to them.

VR Interfaces

As effective as the mouse-hand paradigm has been, it fails in virtual reality. When it comes to VR, most of the interfaces are a lesson in patience. They’re not precise, latency remains an issue, and hand controllers seem designed more for gameplay than for navigation.

One of the most remarkable efforts to solve this has been Intel’s Project Alloy. Project Alloy mixes the real and virtual, putting your real hands instead of a controller in virtual reality, an approach Intel likes to call Merged Reality. Their demo video is remarkable, and we’re hoping for at least a developer release in 2017. But even here, our hands will need more precise renderings in the virtual environment.

How long before we get there?

Clearly, no one is going to wear the rig at the University of Washington to navigate through games or an interface. It sort of reminds you of Ivan Sutherland’s huge early VR headset known as the “Sword of Damocles”. But as with all hardware, size shrinks and sensors become more accurate.

A good guide for how BCI will progress is to look at the current EEG readers. As The Verge notes, these systems don’t actually read your thoughts, but connect neuronal patterns with your actions. Yet go back twenty years, and these were large machines in hospitals, not off-the-shelf consumer technology.

You won’t see sophisticated brain-computer interfaces in the short term. But the research at the University of Washington is bringing mind controlled VR a major step closer to reality.

Emory Craig is a writer, speaker, and consultant specializing in virtual reality (VR) and artificial intelligence (AI) with a rich background in art, new media, and higher education. A sought-after speaker at international conferences, he shares his unique insights on innovation and collaborates with universities, nonprofits, businesses, and international organizations to develop transformative initiatives in XR, AI, and digital ethics. Passionate about harnessing the potential of cutting-edge technologies, he explores the ethical ramifications of blending the real with the virtual, sparking meaningful conversations about the future of human experience in an increasingly interconnected world.